Trusted Reasoning

Actionable insights at the speed of relevance

ThinkTank Maths’ Trusted Reasoning Architecture (TRA™) is a novel mathematical framework. Intelligent systems based on the TRA™ learn, explain their reasoning and highlight subtle anomalies in sensor data. Applications include command and control decision aids, systemic situation awareness in the oil and gas sector, next-generation spacecraft control systems, and Space Domain Awareness, in each case helping users to gain insight, make informed decisions and reduce risk.

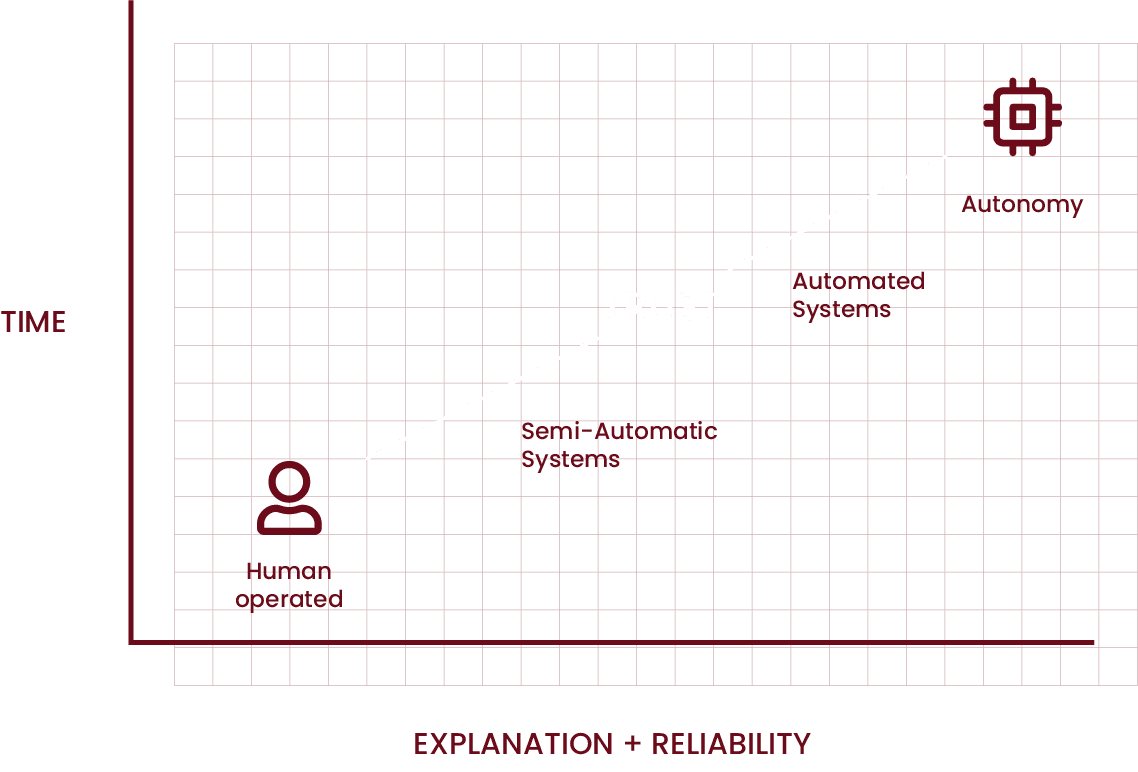

Human beings are increasingly required to collaborate with machines. At one end of the spectrum, an artificial decision aid can compensate for human weaknesses and biases; at the other, it can enhance human capabilities. For example, in the 2009 Hudson River emergency landing, a smart fly-by-wire system freed up the pilot’s capacity to think and allowed him to fully utilise his years of experience. On the other hand, machines can make surprising decisions or recommendations, creating incomprehension and distrust among users. The situation is aggravated by the “black box” nature of many automated systems. Operators are left out of the loop until something unforeseen happens, and are then asked to jump into the middle of a situation that the system was not designed to handle and to make time-critical decisions on the spot.

Furthermore, the current generation of autonomous or intelligent systems functions poorly in dynamic, uncertain environments and fails when confronted with rapid changes, such as the sudden failure of a system component or the appearance of a new object in the environment.

For the user to trust the system, explainability must increase in parallel with reliability. Such explainability can only be achieved via a true two-way dialogue between the machine and the operator: highlighting anomalies, presenting the operator with explanatory key facts and allowing them to comprehend the reasoning behind the system’s decisions at a glance.

ThinkTank Maths was selected by the SEAS DTC (Systems Engineering and Autonomous Systems Defence Technology Centre, a government-funded research consortium) to address the issue of human-machine trust and to create a novel, rigorous mathematical foundation for explainable decision-making. The project was given the name SUSAN, after Isaac Asimov’s fictional robopsychologist Susan Calvin, whose job is to explain unexpected actions taken by robots. Enabling human-machine trust in uncertain environments required far more than just explaining machine reasoning, and TTM had to approach the problem of autonomous decision-making with a clean sheet of paper.

One result was TTM’s Trusted Reasoning Architecture (TRA™), drawing inspiration from the human situation awareness model. The core component is the Situation Awareness Engine, which recognises situations in raw sensor data and presents their key features to the user. The engine not only highlights anomalies but also learns new situations and, unlike a human being, detects an underlying situation even in seemingly unrelated, high-dimensional inputs.

A situation-centric approach makes the decision-making process itself more efficient. Depending on the situation, the Meta-Decision Engine chooses the best decision-making algorithm from a toolbox. This allows a wide range of possibilities, from classical planning to the most complex probabilistic or game-theoretic algorithms. In addition, using a decision engine of the most appropriate complexity for the problem at hand results in significant computational savings.
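The idea of choosing a decision-making algorithm according to the recognised situation can be illustrated with a minimal Python sketch. It is not the TRA™ implementation: the `Situation` fields, the three placeholder algorithms and the selection rules are all hypothetical, chosen only to show how a dispatcher might pick the simplest adequate method and report which one it used.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass(frozen=True)
class Situation:
    """Hypothetical summary of a recognised situation (illustrative fields only)."""
    name: str
    uncertain: bool     # outcomes of actions are not known with confidence
    adversarial: bool   # another agent may act against our goals


# Placeholder "toolbox" entries; real algorithms would be far more involved.
def classical_planner(s: Situation) -> str:
    return f"plan:{s.name}"


def probabilistic_planner(s: Situation) -> str:
    return f"probabilistic:{s.name}"


def game_theoretic_solver(s: Situation) -> str:
    return f"game:{s.name}"


Algorithm = Callable[[Situation], str]


def select_algorithm(s: Situation) -> Algorithm:
    """Pick the least complex algorithm that suits the situation (assumed rules)."""
    if s.adversarial:
        return game_theoretic_solver
    if s.uncertain:
        return probabilistic_planner
    return classical_planner


def decide(s: Situation) -> Tuple[str, str]:
    """Return the name of the chosen algorithm together with its decision."""
    algorithm = select_algorithm(s)
    return algorithm.__name__, algorithm(s)


if __name__ == "__main__":
    print(decide(Situation("routine_patrol", uncertain=False, adversarial=False)))
    print(decide(Situation("sensor_fault", uncertain=True, adversarial=False)))
```

Returning the algorithm name alongside the decision is one simple way to keep the choice visible to the operator, and routing routine situations to the cheapest method is where the computational saving in this sketch would come from.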

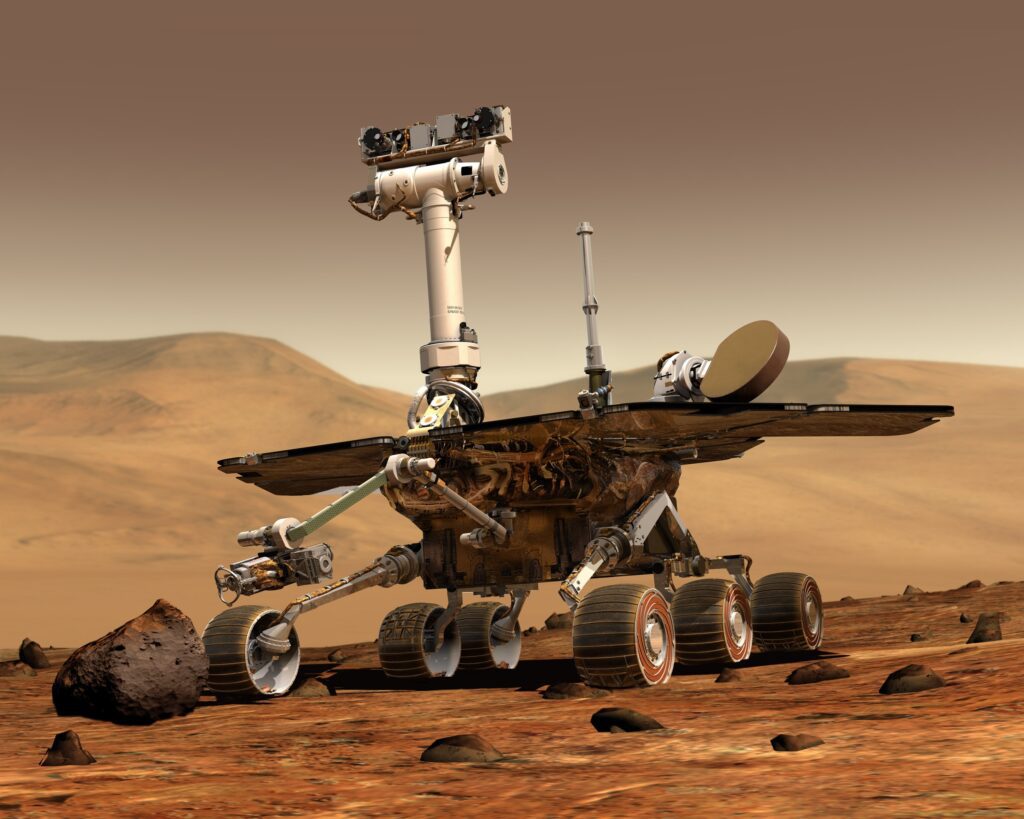

The TRA™ has a wealth of applications. Following initial interest in the emergency services context (e.g. command and control decision aids), ThinkTank Maths has developed this capability in the oil and gas sector for applications ranging from automated geo-steering drilling and fast-loop production control to the detection of system-level anomalies in condition-based monitoring. In the space sector, TTM has shown that the TRA™ has the potential to overcome many of the shortcomings of traditional spacecraft control systems and to support Space Domain Awareness, helping operators to gain insight, make timely, informed decisions and reduce risk.

ThinkTank Maths continues similar fundamental work on autonomy and augmented intelligence. Our mathematical findings will form the foundation of future intelligent systems that help human beings to achieve their full potential.